Tuesday, March 16, 2010

How to Trust the Cloud

Monday, February 16, 2009

Media Temple goes down, provides a nice case study for downtime transparency

Earlier today we saw Media Temple experience intermittent downtime over the course of an hour. The first tweet showed up around 8am PST noting the downtime. At 9:06am Media Temple provided a short message confirming the outage:

"At ~8:30AM Pacific Time we started experiencing networking issues at our El Segundo Data Center. We are working closely with them to determine the cause of these issues and will report any findings as they become available.

At this time we appear to be back fully. The tardiness of this update is a direct result of these networking issues."

So far, not too bad. Though note the broken rule in hosting your status page in the same location as your service. Lesson #1: Host your status page offsite. Let's keep moving with the timeline...

About the same time the blog post went up, a Twitter message by @mt_monitor pointed to the official status update. Great to see that they actually use Twitter to communicate with their users, and judging by the 360 followers, I think this was a smart way to spread the news. On the other hand, this was the only Twitter update from Media Temple throughout the entire incident, which is strange. And it looks like some users were still in the dark for a bit too long. I was also surprised that the @mediatemple feed made no mention of this. Maybe they have a good reason to keep these separate? Looking at the conversation on Twitter, it feels like most people use the @mediatemple label by default. Lesson #2: Don't confuse your users by splitting your Twitter identity.

From this point till about 9:40am PST, users were stuck wondering what was going on:

A few select tweets show us what users were thinking. The conversation on Twitter goes on for about 30 pages, or over 450 tweets from users wondering what the heck was going on.

Finally at 9:40am, Media Temple released their findings:

"Our engineers have spoken with the engineers at our El Segundo Data Center (EL-IDC3). Here are their findings:

ASN number 47868 was broadcasting invalid BGP data that caused our routers, and a lot of other routers on the internet, to reboot. This invalid BGP data exploited a software bug in our routers. We have applied filters to prevent us from receiving this invalid data.

At this time they are in contact with their vendors to see if there is a firmware update that will address this. You can expect to see network delays and small outages across the internet as other providers try to address this same issue."

Now that everything is back up and users are "happy", what else can we learn from this experience?

Lessons

- Host your status page offsite. (covered above)

- Don't confuse your users by splitting your Twitter identity. (covered above)

- Some transparency is better than no transparency. The basic status message helped calm people down and reduce support calls.

- There was a huge opportunity here for Media Temple to use the tools of social media (e.g. Twitter/Blogging) as a two-way communication channel. Instead, Media Temple used both their blog and Twitter as a broadcast mechanism. I guarantee that if there were just a few more updates throughout the downtime period the tone of the conversation on Twitter would have been much more positive. Moreover, the trust in the service would have been damaged less severely if users were not in the dark for so long.

- A health status dashboard would have been very effective in providing information to the public beyond the basic "we are looking into it" status update. Without any work on the part of Media Temple during the event, its users would have been able to better understand the scope of the event, and know instantly whether or not it was still a problem. It would have been extremely powerful when combined with lesson 4 (two-way communication), if a representative on Twitter had simply pointed users complaining of the downtime to the status page.

- The power of Twitter as a mechanism for determining whether a service is down (or whether it is just you), and in spreading news across the world in a matter of minutes, again proves itself.
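
To make the health status dashboard lesson a bit more concrete, here is a minimal Python sketch of how probe results could be boiled down to a public status line. It assumes the probes come from a checker running offsite (per lesson 1). The names `ProbeResult`, `summarize`, and `overall`, and the service labels, are made up for illustration and are not anything Media Temple actually runs.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ProbeResult:
    """One health probe taken by an offsite checker (hypothetical structure)."""
    service: str
    ok: bool
    checked_at: datetime


def summarize(results):
    """Keep only the most recent probe per service and map it to 'up'/'down'."""
    latest = {}
    for r in results:
        prev = latest.get(r.service)
        if prev is None or r.checked_at > prev.checked_at:
            latest[r.service] = r
    return {name: ("up" if r.ok else "down") for name, r in latest.items()}


def overall(status_by_service):
    """Headline for the top of the status page."""
    states = list(status_by_service.values())
    if not states:
        return "unknown"
    if all(s == "up" for s in states):
        return "all systems up"
    if all(s == "down" for s in states):
        return "major outage"
    return "partial outage"


if __name__ == "__main__":
    # Illustrative timestamps only, not real monitoring data.
    earlier = datetime(2009, 2, 16, 16, 30, tzinfo=timezone.utc)
    later = datetime(2009, 2, 16, 17, 40, tzinfo=timezone.utc)
    probes = [
        ProbeResult("web", False, earlier),
        ProbeResult("web", True, later),
        ProbeResult("mail", False, later),
    ]
    status = summarize(probes)
    print(status, "->", overall(status))
```

The point of the design is that the page updates itself from probe data, so users can see scope and recovery without anyone at the provider having to write an update mid-incident.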

What every online service can learn from Ma.gnolia's experience

A lot has been said about the problem of trust in the Cloud. Most recently, Ma.gnolia, a social bookmarking service, lost all of its customers' data and is in the process of rebuilding (both the service and the data). The naive take-away from this event is to use this as further evidence that the Cloud cannot be trusted. That we're setting ourselves up for disaster down the road with every SaaS service out there. I see this differently. I see this as a key opportunity for the industry to learn from this experience, and to mature. Both through technology (the obvious stuff) and through transparency (the not-so-obvious stuff). Ma.gnolia must be doing something right if the community has been working diligently and collaboratively in restoring the lost data, while waiting for the service to come back online and to use it again.

What can we learn from Ma.gnolia's experience?

Watching the founder Larry Halff explain the situation provides us with some clear technologically oriented lessons:

- Test your backups

- Have a good version-based backup system in place

- Outsource your IT infrastructure as much as possible (e.g. AWS, AppEngine, etc.)
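
As a rough sketch of what "test your backups" can mean in practice, the Python below restores a backup file to a scratch location and compares its checksum with the original. The function names and paths are hypothetical, and a plain file copy stands in for whatever restore procedure a real backup system would use.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backup(original, backup):
    """Restore `backup` into a scratch directory and compare it with `original`.

    The "restore" step is just a file copy here (an assumption for this sketch);
    swap in the real restore command for an actual backup system.
    """
    with tempfile.TemporaryDirectory() as scratch:
        restored = Path(scratch) / "restored"
        shutil.copyfile(backup, restored)
        return sha256_of(restored) == sha256_of(original)
```

A check like this only makes sense against a snapshot taken at the same moment as the backup, since live data keeps changing. What matters is that the restore path gets exercised regularly, before it is needed.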

For those that aren't interested in watching 12 minutes of video, here are the main points:

- Disclose what your infrastructure is and let users decide if they trust it

- Provide insight into your backup system

- Create a personal relationship with your users where possible

- Don't wait for your service to have to go through this experience, learn from events like this

- Not mentioned in the video, but clearly implied: communicate with your community openly and honestly.

Thursday, January 8, 2009

Salesforce.com down for over 30 minutes, and what we can learn from it

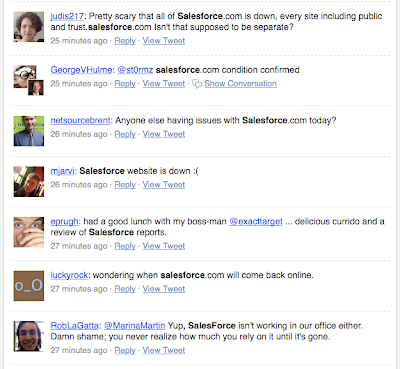

Update: Again, Twitter ends up being the best place to confirm a problem and get updates across the world:


Update 2: Salesforce has posted an explanation of what led to the downtime (from trust.salesforce.com):

"6:51 pm PST : Service disruption for all instances - resolved

Starting at 20:39 UTC, a core network device failed due to memory allocation errors. The failure caused it to stop passing data but did not properly trigger a graceful fail over to the redundant system as the memory allocation errors where present on the failover system as well. This resulted in a full service failure for all instances. Salesforce.com had to initiate manual recovery steps to bring the service back up.

The manual recovery steps was completed at 21:17 UTC restoring most services except for AP0 and NA3 search indexing. Search of existing data would work but new data would not be indexed for searching.

Emergency maintenance was performed at 23:24 UTC to restore search indexing for AP0 and NA3 and the implementation of a work-around for the memory allocation error.

While we are confident the root cause has been addressed by the work-around the Salesforce.com technology team will continue to work with hardware vendors to fully detail the root cause and identify if further patching or fixes will be needed.

Further updates will be available as the work progresses."

Update 3: Lots of coverage of this event all over the web. All of the coverage focuses on the downtime itself, how unacceptable it is, and how bad this makes the cloud look. That's all crap. Everything fails. In-house apps more so than anything. We can't avoid downtime. What we can control is the communication during and after the event, to avoid situations like this:

"Salesforce, the 800-pound gorilla in the software-as-a-service jungle, was unreachable for the better part of an hour, beginning around noon California time. Customers who tried to access their accounts alternately were unable to reach the site at all or received an error message when trying to log in.

Even the company's highly touted public health dashboard was also out of commission. That prompted a flurry of tweets on Twitter from customers wondering if they were the only ones unable to reach the site."

That's where SaaS providers need to focus! Create lines of communication, open the kimono, and let the rays of transparency shine through. It's completely in your control.